Data Load (ETL) Process using Azure Functions

Azure Functions

Azure Functions are serverless and are well suited to processing data, integrating systems, working with the Internet of Things (IoT), and building simple APIs and microservices. Consider Functions for tasks like image or order processing, file maintenance, or anything you want to run on a schedule.

This post covers implementing an ETL process with Azure Functions. Azure Data Factory is also an option, but if you are a C# developer you will enjoy this approach. You can take advantage of the App Service plan and/or the Consumption plan (pay as you go), along with the event-driven processing and programming model.

Azure Durable Functions

Durable Functions is an extension of Azure Functions that lets you write stateful functions in a serverless compute environment. Using the Azure Functions programming model, you define stateful workflows by writing orchestrator functions and stateful entities by writing entity functions; orchestrators delegate the actual units of work to activity functions. The Durable Functions runtime takes care of state management, checkpoints, and restarts for you, so you can focus on your business logic.

This post assumes the reader is already familiar with Azure Functions and Durable Functions.
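To make "state management, checkpoints, and restarts" more concrete, here is a toy, in-memory model of the checkpoint-and-replay idea: an orchestrator yields activity calls, each completed result is recorded in a history, and after a simulated restart the orchestrator is replayed from the beginning, reusing recorded results instead of re-running finished activities. This is a sketch under stated assumptions, not the Durable Task runtime (which persists history in a storage provider such as Azure Storage); `ToyDurableEngine`, the activity names, and the crash simulation are all hypothetical.

```python
from typing import Any, Callable, Dict, Generator, List, Tuple

# A call request yielded by an orchestrator: (activity_name, input).
Call = Tuple[str, Any]
Orchestrator = Callable[[Any], Generator[Call, Any, Any]]


class ToyDurableEngine:
    """Hypothetical model of checkpoint-and-replay, for illustration only."""

    def __init__(self, activities: Dict[str, Callable[[Any], Any]]):
        self.activities = activities
        self.history: List[Any] = []     # checkpointed activity results
        self.executions: List[str] = []  # activities that actually ran

    def run(self, orchestrator: Orchestrator, value: Any, crash_after: int = -1) -> Any:
        """Replay the orchestrator from the start and return its result.

        If crash_after >= 0, raise RuntimeError once that many new
        activities have run, to simulate the host restarting mid-workflow.
        """
        gen = orchestrator(value)
        step = 0      # position in the orchestrator's sequence of calls
        new_runs = 0  # activities executed (not replayed) during this run
        try:
            call = next(gen)
            while True:
                if step < len(self.history):
                    result = self.history[step]  # replay: reuse the checkpoint
                else:
                    if new_runs == crash_after:
                        raise RuntimeError("simulated host restart")
                    name, arg = call
                    result = self.activities[name](arg)
                    self.executions.append(name)
                    self.history.append(result)  # checkpoint the new result
                    new_runs += 1
                step += 1
                call = gen.send(result)
        except StopIteration as done:
            return done.value


def etl_orchestrator(blob_name: str) -> Generator[Call, Any, int]:
    """Hypothetical ETL workflow: parse, save, then notify."""
    rows = yield ("ParseCsv", blob_name)
    count = yield ("SaveRows", rows)
    yield ("SendEmail", count)
    return count


activities = {
    "ParseCsv": lambda name: [["1", "Widget"], ["2", "Gadget"]],
    "SaveRows": lambda rows: len(rows),
    "SendEmail": lambda n: f"sent: {n} rows",
}

engine = ToyDurableEngine(activities)
try:
    engine.run(etl_orchestrator, "orders.csv", crash_after=2)  # dies before SendEmail
except RuntimeError:
    pass
result = engine.run(etl_orchestrator, "orders.csv")  # replays, runs only SendEmail
print(result)             # 2
print(engine.executions)  # ['ParseCsv', 'SaveRows', 'SendEmail']
```

Each activity runs exactly once even though the workflow was interrupted. This replay model is also why orchestrator code must be deterministic: on replay it has to yield the same calls in the same order.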

Business Requirement

A CSV file is dropped into an Azure Blob Storage container; the file should be processed and its data saved to an Azure SQL database.

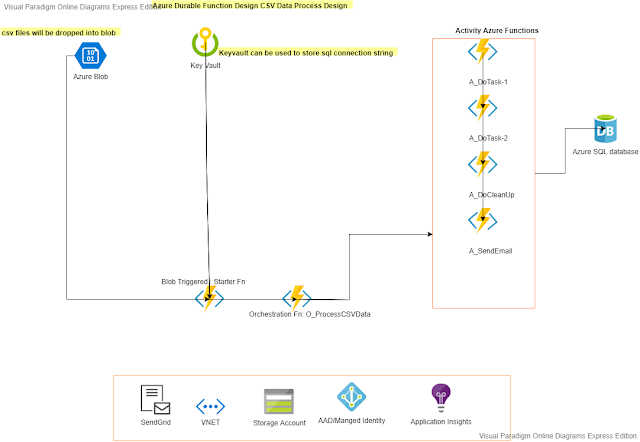

Design and Architecture

- Azure Function: a blob-triggered function that acts as the starter for the data load process.

- Azure Durable Functions - Orchestrator: an orchestrator function that manages the workflow, invoking the activity functions and tracking all their executions.

- Azure Durable Functions - Activity: a function that does the actual work: it processes the CSV data and inserts it into the Azure SQL database.

- Azure SQL Server: stores the processed data.

- SendGrid: sends acknowledgment emails when processing completes.

- Application Insights: can be used to log exceptions and other events.
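For the SendGrid step, the acknowledgment boils down to a request body for SendGrid's v3 mail send API. The sketch below only builds that body so its shape is visible; in the actual function you would typically send it through the SendGrid output binding or client library with an API key. The helper name, addresses, and message text are hypothetical.

```python
import json
from typing import Any, Dict


def build_completion_email(to_addr: str, from_addr: str, blob_name: str, rows_saved: int) -> Dict[str, Any]:
    """Build a SendGrid v3 mail-send request body acknowledging an ETL run."""
    return {
        "personalizations": [{"to": [{"email": to_addr}]}],
        "from": {"email": from_addr},
        "subject": f"ETL complete: {blob_name}",
        "content": [
            {
                "type": "text/plain",
                "value": f"{rows_saved} rows from {blob_name} were saved to Azure SQL.",
            }
        ],
    }


payload = build_completion_email("ops@example.com", "etl@example.com", "orders.csv", 42)
print(json.dumps(payload, indent=2))
```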

Development Environment Setup:

- Visit MSDN for a step-by-step example.

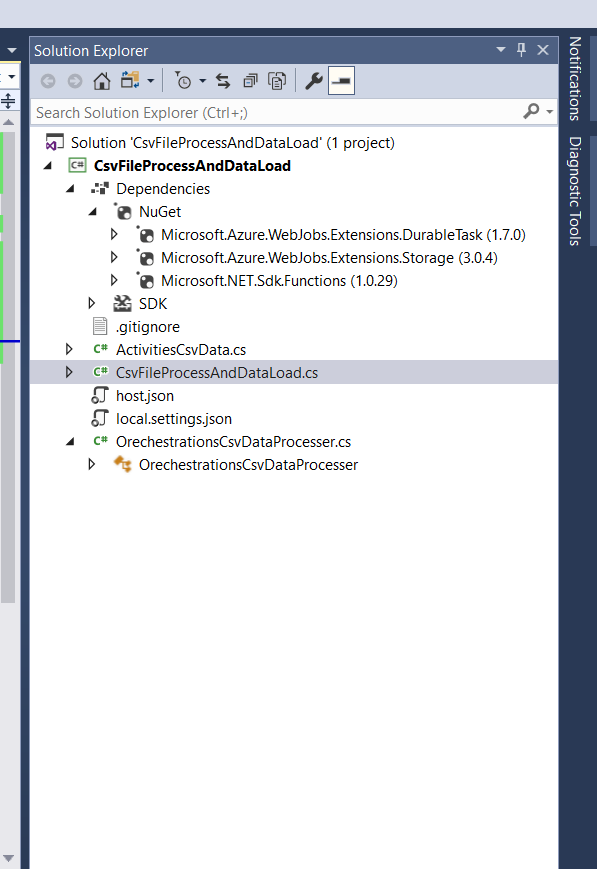

- Required NuGet package: Microsoft.Azure.WebJobs.Extensions.DurableTask

Code and Example:

Here are the code screenshots.

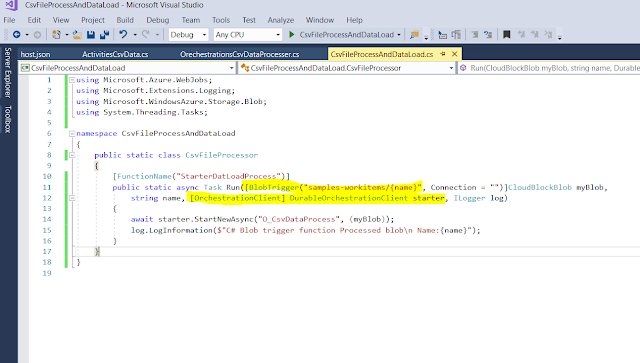

Start Function: a blob-triggered Azure function that runs automatically whenever a CSV file is dropped into the "samples-workitems" container.
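A blob trigger decides which blobs start the function through its path pattern. One way to restrict it to CSV files is a pattern such as samples-workitems/{name}.csv (an assumption; the screenshot may filter differently). The hypothetical helper below imitates that matching so you can see which blob paths would fire and what {name} binds to; it is not the Functions runtime's implementation.

```python
import re
from typing import Dict, Optional


def match_blob_path(pattern: str, blob_path: str) -> Optional[Dict[str, str]]:
    """Match a pattern like 'samples-workitems/{name}.csv' against a blob path.

    Returns the captured {placeholders}, or None when the path does not match.
    """
    regex = ""
    # Split the pattern into literal text and {placeholder} names.
    for literal, name in re.findall(r"([^{]*)(?:\{(\w+)\})?", pattern):
        regex += re.escape(literal)
        if name:
            regex += f"(?P<{name}>.+)"
    m = re.fullmatch(regex, blob_path)
    return m.groupdict() if m else None


pattern = "samples-workitems/{name}.csv"
print(match_blob_path(pattern, "samples-workitems/orders.csv"))  # {'name': 'orders'}
print(match_blob_path(pattern, "samples-workitems/orders.txt"))  # None
```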

Orchestrator Function:

It manages the life cycle of the data workflow.

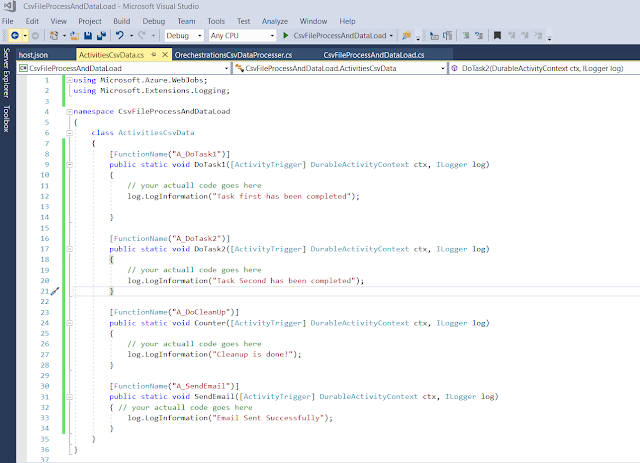
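A common way to structure such an orchestrator for larger files is fan-out/fan-in: split the rows into batches, start one activity call per batch, and wait for all of them. This is a swapped-in pattern, not necessarily what the screenshot does. In C#, the fan-out is typically expressed with CallActivityAsync on the orchestration context plus Task.WhenAll; the sketch below substitutes a thread pool, and `orchestrate`, `chunk`, and `save_batch` are hypothetical names.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List, Sequence

Row = List[str]


def chunk(rows: Sequence[Row], size: int) -> List[List[Row]]:
    """Split rows into batches of at most `size` rows."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [list(rows[i:i + size]) for i in range(0, len(rows), size)]


def orchestrate(rows: Sequence[Row], save_batch: Callable[[List[Row]], int], batch_size: int = 500) -> int:
    """Fan out one save_batch call per batch, then fan in by summing counts.

    The thread pool stands in for activity calls awaited together.
    """
    batches = chunk(rows, batch_size)
    with ThreadPoolExecutor(max_workers=4) as pool:
        counts = list(pool.map(save_batch, batches))
    return sum(counts)


rows = [[str(i), f"item-{i}"] for i in range(1250)]
total = orchestrate(rows, save_batch=len, batch_size=500)
print(total)                              # 1250
print([len(b) for b in chunk(rows, 500)])  # [500, 500, 250]
```

Keeping each batch small keeps each activity execution short, which matters on plans with a per-execution time limit.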

Activity Functions

These perform the actual data manipulation and communication with the database.
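The core of the activity is "parse CSV, then insert rows". The sketch below shows that with Python's csv module and a parameterized, single-transaction insert, using an in-memory SQLite database as a stand-in for Azure SQL. The Orders table, its columns, and the CSV layout are assumptions; in the C# activity the equivalent would be a parameterized SqlCommand or SqlBulkCopy against the Azure SQL connection.

```python
import csv
import io
import sqlite3
from typing import List, Tuple

Order = Tuple[int, str, float]


def parse_orders(csv_text: str) -> List[Order]:
    """Parse CSV text with a header row: id,product,amount (hypothetical layout)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [(int(r["id"]), r["product"].strip(), float(r["amount"])) for r in reader]


def save_orders(conn: sqlite3.Connection, rows: List[Order]) -> int:
    """Insert all rows in one transaction using a parameterized statement."""
    with conn:  # commits on success, rolls back if any insert fails
        conn.executemany("INSERT INTO Orders (Id, Product, Amount) VALUES (?, ?, ?)", rows)
    return len(rows)


conn = sqlite3.connect(":memory:")  # stand-in for the Azure SQL database
conn.execute("CREATE TABLE Orders (Id INTEGER PRIMARY KEY, Product TEXT, Amount REAL)")
sample = "id,product,amount\n1,Widget,9.99\n2,Gadget,24.50\n"
saved = save_orders(conn, parse_orders(sample))
print(saved)  # 2
```

Parameterized statements keep CSV content from being interpreted as SQL, and one transaction per file (or per batch) means a failed load does not leave half the rows behind.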

Solution and NuGet Package:

Bonus Points:

- Use the Consumption plan only if you are sure your function executions will not exceed the 10-minute limit.

- Use an App Service plan if you need to configure a VNet and other security settings, or if your function needs more than 10 minutes to complete; in that case, set the function timeout (functionTimeout) in host.json.
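The timeout lives in host.json as functionTimeout, for example "functionTimeout": "00:30:00". The sketch below checks a value against per-plan maximums: 10 minutes on the Consumption plan, and no fixed cap on an App Service plan, where -1 means unbounded. These limits reflect Microsoft's documentation for Functions v2+ at the time of writing and should be re-checked; the helper names are hypothetical, and the parser only handles the hh:mm:ss form.

```python
from datetime import timedelta
from typing import Optional

# Maximum functionTimeout per plan, in minutes (None = no fixed cap).
# Assumed values; verify against the current Azure Functions docs.
PLAN_MAX_MINUTES = {"consumption": 10, "app_service": None}


def parse_function_timeout(value: str) -> Optional[timedelta]:
    """Parse 'hh:mm:ss' (e.g. '00:10:00'); '-1' means unbounded (returns None)."""
    if value.strip() == "-1":
        return None
    hours, minutes, seconds = (int(part) for part in value.split(":"))
    return timedelta(hours=hours, minutes=minutes, seconds=seconds)


def timeout_allowed(plan: str, value: str) -> bool:
    """Return True if the functionTimeout value fits within the plan's maximum."""
    limit = PLAN_MAX_MINUTES[plan]
    timeout = parse_function_timeout(value)
    if limit is None:
        return True
    return timeout is not None and timeout <= timedelta(minutes=limit)


print(timeout_allowed("consumption", "00:10:00"))  # True
print(timeout_allowed("consumption", "00:30:00"))  # False
print(timeout_allowed("app_service", "02:00:00"))  # True
```

The limit applies to each function execution, so splitting a long load into several shorter activity calls is another way to stay under the Consumption plan's cap.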

- Visit the Durable Functions documentation for more application patterns.
